Regardless of size, every organization grapples with managing, accessing, and interpreting data. Such challenges can obstruct sound decision-making. Historically, solutions have included analytics platforms, dedicated teams of analysts, and developers. While effective, these come with constraints, both financial and human. These constraints often extend the Time to Insight (TTI): the gap between posing a question and receiving an actionable answer.

In smaller, agile organizations, TTI is often minimal due to direct communication, swift decision-making, and immediate access to data. Yet, as they grow, complexities arise. Increased data volume, intricate communication paths, a rising number of decision-makers, and emerging knowledge silos can balloon the TTI. Consequently, the once-quick insight extraction becomes a drawn-out process.

The overarching question is: How can the efficiency of smaller teams be scaled organization-wide, and how can TTI be minimized for everyone? This quandary branches into three core domains:

Collection – How do we effectively gather relevant data?

Categorization – How can we organize and showcase this data in meaningful groups?

Inference – How can we make informed decisions from the data?

At CosoLogic, we’re keen on orchestrating the domains of Collection, Categorization, and Inference. We will explore each in future posts; this piece zeroes in on Inference.

Historically, knowledge management varied in sophistication. We’ve seen everything from tools like SharePoint, Excel, and databases to dashboards and the knowledge trapped in colleagues’ minds. But they had a shared drawback: users needed to navigate the maze of where and how to source their answers.

What has changed?

Traditionally, answering a data request needed a human touch: someone who understood where the data lived and how to retrieve it (SQL, an API, a file search). Such manual interventions brought delays and other human-centric challenges.

Enter Generative AI. It not only grasps user queries but also offers on-the-spot answers by intelligently interfacing with our data. Imagine having a domain expert on every user’s side – that’s the power of this AI. Everyone can now tap into a 24/7 AI assistant, ready and equipped with the organization’s entire knowledge base.

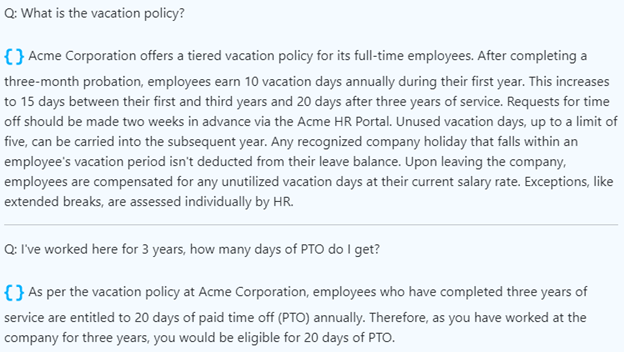

Large Language Models (LLMs) let users move beyond mere keyword searches because the model interprets the context and intent of a question. Consider questions about time off: even when the user never types the term “vacation”, relevant information can be retrieved. This represents a transformative leap from conventional search techniques.

Queries might include:

- What is the vacation policy?

- How much PTO do I have?

- What’s the policy on time off?

- What’s the protocol for personal days?
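To make the contrast concrete, here is a minimal sketch in Python. It is not how an LLM works internally: the hand-written `CONCEPTS` table is a hypothetical stand-in for the notion of "same intent" that a language model learns from data, and the document title is made up for illustration.

```python
import re

# Hypothetical synonym table: a hand-written stand-in for the sense of
# "same intent" that an LLM or embedding model would learn on its own.
CONCEPTS = {
    "vacation": "time_off",
    "pto": "time_off",
    "time off": "time_off",
    "personal days": "time_off",
}

# Made-up document title, used only for this illustration.
DOCUMENT = "Vacation Guidelines"

QUERIES = [
    "What is the vacation policy?",
    "How much PTO do I have?",
    "What's the policy on time off?",
    "What's the protocol for personal days?",
]


def words(text: str) -> set:
    """Lowercase the text and split it into a set of plain words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def keyword_match(query: str, document: str) -> bool:
    """Conventional search: match only if a literal word is shared."""
    return bool(words(query) & words(document))


def concepts(text: str) -> set:
    """Map every known phrase found in the text to its concept label."""
    lowered = text.lower()
    return {
        label
        for phrase, label in CONCEPTS.items()
        if re.search(rf"\b{re.escape(phrase)}\b", lowered)
    }


def intent_match(query: str, document: str) -> bool:
    """Intent-style search: match if query and document share a concept."""
    return bool(concepts(query) & concepts(document))


keyword_hits = [keyword_match(q, DOCUMENT) for q in QUERIES]
intent_hits = [intent_match(q, DOCUMENT) for q in QUERIES]
```

In this toy setup only the first query finds the document by keyword, while all four find it once they are compared by concept. A real system would replace the lookup table with a model, but the shape of the improvement is the same.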

An HR professional can handle all of these phrasings, but a conventional search tool might falter on any that do not contain the exact keyword. The human touch remains irreplaceable when data is missing or not in the knowledge base: subject-matter experts (SMEs) curate and safeguard knowledge. And, sometimes, even AI may not have the answer. In such cases, AI can guide users to human experts, suggest sources, or prompt users to contribute knowledge.
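That fallback behavior can be sketched as a simple routing rule. The snippet below is an illustrative assumption, not a description of any particular product: the `KNOWLEDGE_BASE` and `EXPERTS` tables, the topic names, and the `respond` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Response:
    kind: str      # "answer", "refer_to_expert", or "request_contribution"
    message: str


# Hypothetical data: topics the assistant can answer, and who owns each topic.
KNOWLEDGE_BASE = {"time_off": "See the Vacation Guidelines document."}
EXPERTS = {"time_off": "HR team", "payroll": "Finance team"}


def respond(topic: str) -> Response:
    """Answer from the knowledge base, else fall back to people."""
    if topic in KNOWLEDGE_BASE:
        return Response("answer", KNOWLEDGE_BASE[topic])
    if topic in EXPERTS:
        # No stored answer, but we know who to ask.
        return Response(
            "refer_to_expert",
            f"No answer on file; try asking the {EXPERTS[topic]}.",
        )
    # Neither an answer nor an owner: invite the user to fill the gap.
    return Response(
        "request_contribution",
        "No answer or expert on file; consider adding this topic.",
    )
```

Here `respond("time_off")` returns a stored answer, `respond("payroll")` points to a human expert, and an unknown topic such as `respond("parking")` asks the user to contribute.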

We’re just getting started…

AI isn’t the ultimate answer to data inference, but reducing TTI has a clear productivity benefit: time saved becomes a resource that can be redirected to organizational goals. AI’s search and Q&A capabilities are just the tip of the iceberg; with LLMs, the horizon expands to better data extraction, workflow, and API integration.

CosoLogic is at the forefront, partnering with leaders in the field. Our mission? Seamless integration of these tools into organizational structures, amplifying productivity, and ensuring everyone reaps their benefits, steering the organization towards unparalleled growth and success. Interested? Reach out through our “Contact Us” option.